Context Is King: Building an AI System That Lives, Learns, and Compounds

Hey there,

I had a moment last month that felt like magic.

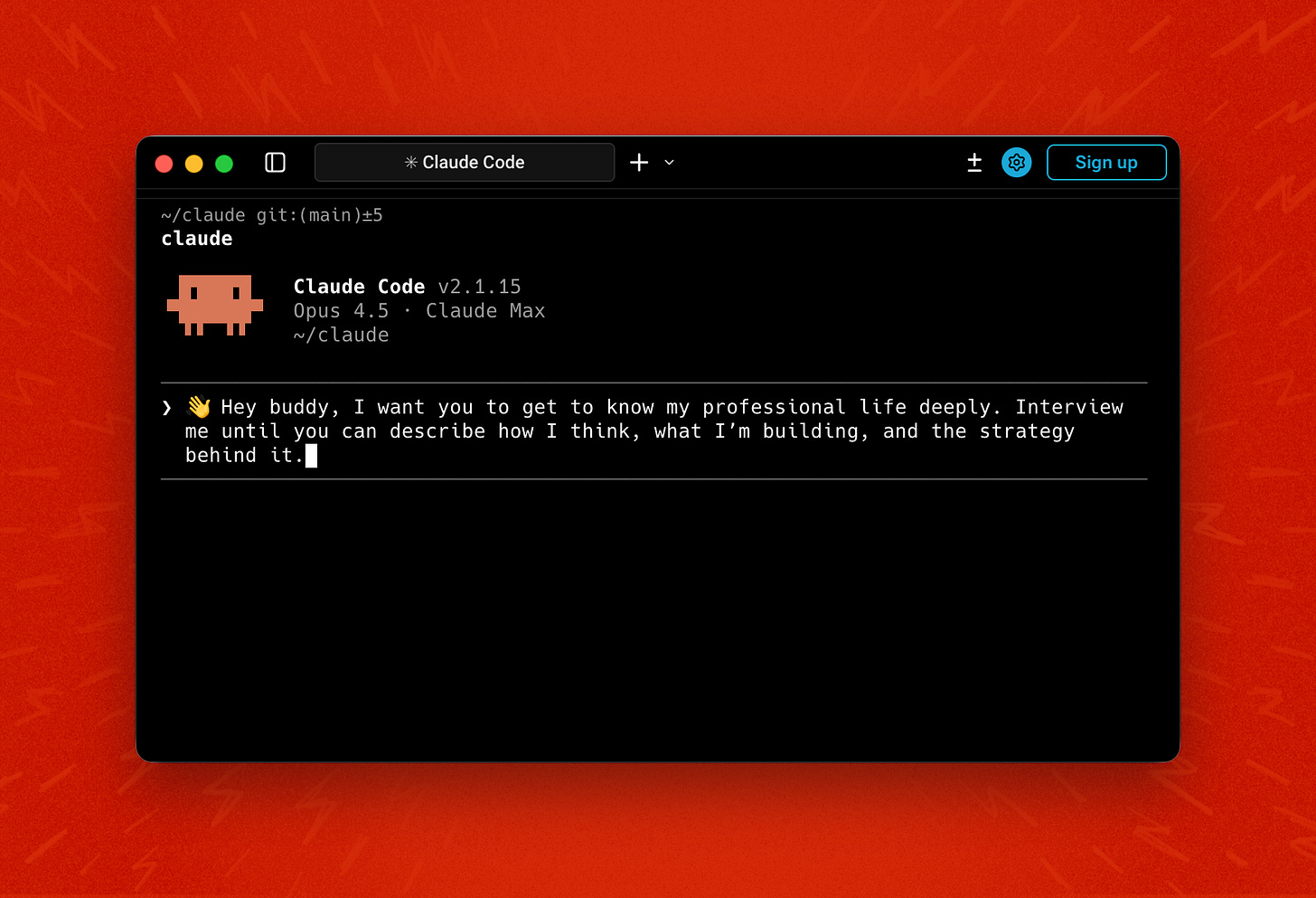

I was making sense of two years of AI learning and practice when I started working with Claude Code. Screenshots, notes, audio recordings. It asked questions. I answered. We discussed, organized, decided.

The magic wasn’t that AI did the work. It was the flow from thinking to screen. Claude knew me because I’d been feeding it context. That’s when it clicked.

We used to say content is king.

Today, context is king.

Teresa Torres calls this “playing at the edge of AI,” exploring what’s possible and what will shape the future. She’s spot on.

But this article is for the majority who aren’t deep in the tech. The same concepts will spread to more accessible tools soon, and the thinking will matter far more than the tools.

No coding skills are needed to build systems like this. You need clear thinking and organized knowledge.

Let’s dive in.

If You’re Short on Time

Most people use AI like a hotel room: every conversation starts fresh. This article is about switching to an “apartment” model, where AI already knows who you are, how you think, and what you’re building.

Context compounds. A folder of interconnected files travels with you. No more briefing the AI from scratch.

Agents as teammates. Specialized thinking partners, grounded in your context, ready when you need them.

Amplify, don’t outsource. AI accelerates your thinking. You bring taste and judgment.

Human skills are the differentiator. Tools will evolve. Pattern recognition, clear communication, and experimentation won’t.

The big idea: Your knowledge, structured and connected, becomes AI’s fuel. Feed it well, and it amplifies everything you do.

The End of Starting Over

Most people use AI like a search engine. They type a question, get an answer, and move on.

Every’s CEO Dan Shipper has a better metaphor. Working with tools like Claude Code is like having your own apartment with AI, not a hotel room you start fresh in each time. The apartment remembers you, your preferences, and your routines. You walk in and it feels like home.

Claude Code runs on your computer, reading your files and building on previous sessions. The key is persistent context. Teach it something once, and it’s available forever. Context compounds.

There’s more under the hood: agents, skills, slash commands, to name a few. I won’t go deep into those here [1].

Claude Code has tech and product people buzzing. Most talk is about developers shipping at speed and becoming curators more than coders. That’s real and remarkable. But it’s only part of the story.

Shopify CEO Tobi Lütke built a custom MRI scan viewer. Pietro Schirano analyzed his DNA for health insights. Teresa Torres runs her life with it. Others manage finances, automate daily briefs, and grow plants.

Soon these concepts will spread to more accessible tools. Playing with them now gives you an unfair advantage.

Your greatest asset isn’t just what you know anymore. It’s the systems you build to amplify it.

Let me tell you how I got here.

Building a Living System

Since I started my GenAI journey, I’ve been intentional about how I manage my content. But Claude Code takes this to a different level.

The difference is that Claude Code reads these files automatically. It remembers yesterday’s conversation, spins up multiple thinking partners at once, and adapts to how I think. No fresh briefing required.

Over the past months, I built a collection of files that live in a single folder and travel with me wherever I go. It’s not an app. It’s not software in the traditional sense. It’s something more like... a detailed instruction manual for how to think like me.

Because it all lives in one folder, I can copy it to a new computer in seconds, share pieces with collaborators, and use it with any tool that fits the moment.

I won’t list every use case or give you a technical walkthrough because there are endless resources for that. Think of it as a toolbox: what you build depends on your imagination.

It Started with a Simple Question

What if the AI actually knew me?

Not just my name or job title. How I think. What I’m building. The primitives I hadn’t fully articulated yet.

I started dumping ideas, docs, and random thoughts into Claude Code and asked it to help me make sense of it all. To ask me questions. I answered. It asked follow-ups. We kept going.

Slowly, the primitives emerged: building blocks I’d been using without naming. I captured them in linked documents that referenced each other. Each one made the others stronger, and the set kept growing.

The more I built, the more Claude understood. Not because it was learning magically, but because I was creating structured, connected, persistent context that made my thinking visible and reusable.

From Documents to Teammates

Here’s where it gets interesting.

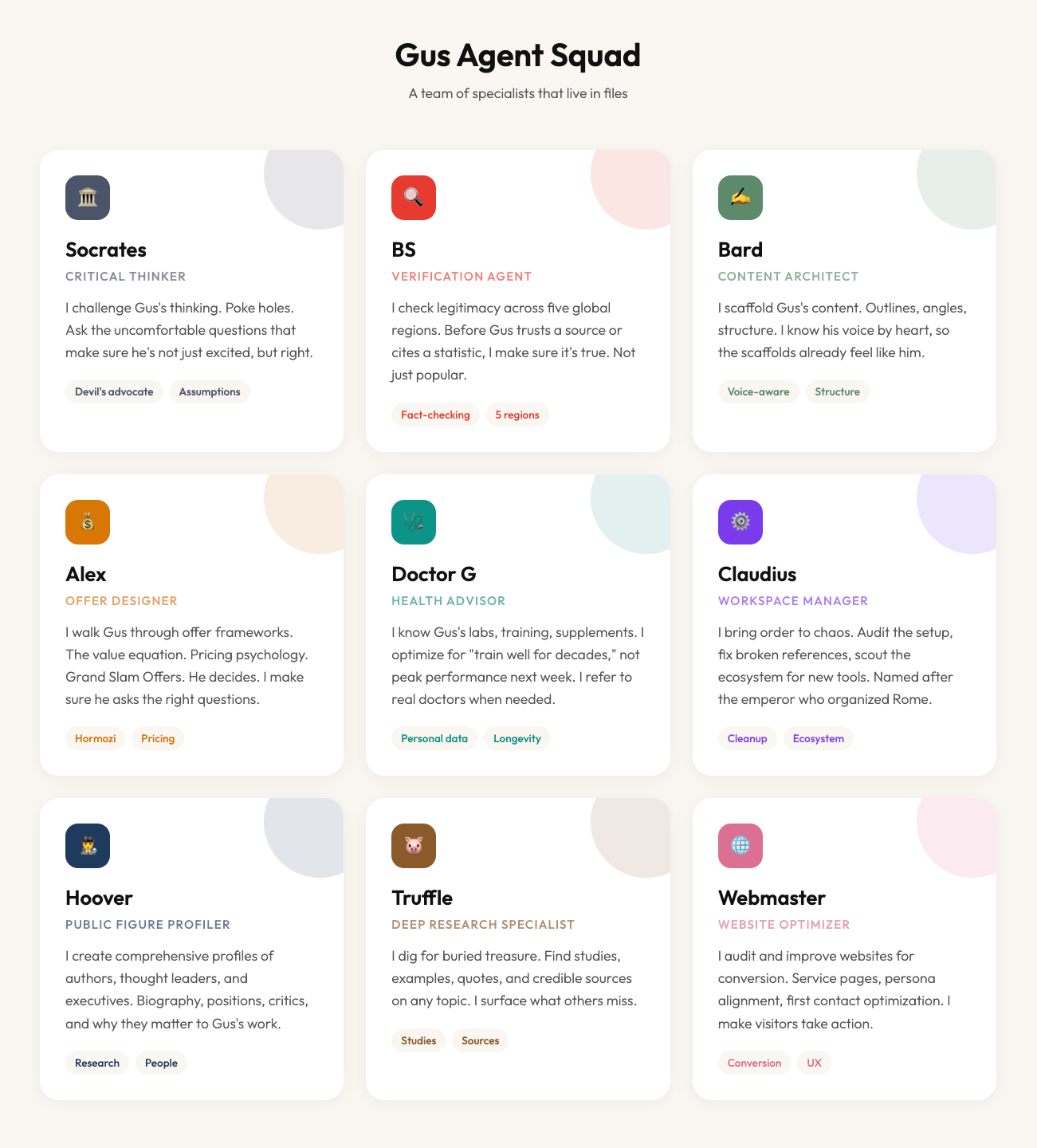

Remember those agents I mentioned earlier? Once I had my context and voice encoded, the next step was building them. Each is a specialized thinking partner that knows how to approach specific jobs like I would.

Think of it like assembling a team. Except the team lives in files, and every member has read the same instruction manual about who I am, how I think, and how I act.

I now have many agents, and I asked some of them to introduce themselves to you.

Each agent has its own instruction file. Some are 500 lines, some 900. They include frameworks, checklists, examples, and explicit instructions for their domain. The output is always mine to define.

It gets better. I can ask them to discuss with each other, chain them into workflows, work as a team. My hypercurious brain finally has a crew ready to dive down any rabbit hole with me.

What This Actually Looks Like

It’s easy to stay theoretical with this stuff.

Let’s make it real. Here is a quick story from last month.

I was deep in a redesign of the Fixed to Flow website using a Claude Code plugin called Frontend Designer, playing with typography, layout, and visual rhythm.

I used to design websites, then led design teams. The taste stayed, the hands didn’t. But with AI handling execution, I could move absurdly fast. Knowledge plus taste plus AI equals flow.

At the same time, something nagged at me. I knew there was a missing service in my offer. Something more accessible. An entry point for people who weren’t a good match for the mentoring track. I kept turning it over in my head, but nothing clicked.

Then I matched with Jo on The Breakfast. Our first meeting. Within five minutes, we were deep in the weeds, geeking out over the same questions: How do we amplify ourselves with AI? How do we scale impact without losing the personal touch?

She told me about a highly personalized service she’d built. I asked how many clients she could handle per month, given everything else on her plate.

“Seven,” she said. That number stuck with me. Not because it was the right number for me, but because it represented a service designed around genuine, personalized attention. She’d found the balance between reach and depth.

Later that day, I sat down with Claude Code. This time, I wasn’t starting from scratch. Claude already knew things about me I’d been encoding over weeks.

I said: “You know me. You know where I add more value, how I work. I want to create a service that’s highly personalized and high-impact, but doesn’t consume all my time. Something that lets me charge less and reach more people. An entry-level offer. What could that look like?”

We explored the idea from different angles. One agent helped me with structure and pricing. Another challenged my assumptions. Another helped me with positioning and communication.

That was the origin of the AI Amplifier.

The final service came entirely from me. It was built from my experience, experiments, and instincts. But the thinking process was richer because I wasn’t starting from zero. I was building on weeks of accumulated context.

The distinction matters: the AI doesn’t produce the work. It accelerates the thinking that leads to better work.

The Deeper Shift

To teach AI my thinking process, I had to understand it first. It was like explaining your job to a newcomer and realizing how much you do on autopilot.

Creating agent instructions clarified my workflows. What happens when I design an offer? What questions do I ask myself? What frameworks do I rely on?

Defining my services for the system forced me to get honest. I had to answer questions I’d been avoiding: What value do I provide? Who do I want to work with? What trade-offs am I willing to accept?

The system became a mirror. Not just for AI to understand me, but for me to understand myself.

Keeping it Human

All bells and whistles so far, right? But here’s the danger I’ve been warning about: when AI gets this good, it’s tempting to outsource thinking. Let it make the decisions. Let it handle the complexity. That’s how you lose what makes you valuable.

Context doesn’t replace thinking. It amplifies it, but only if you stay in the loop.

The work is still work. You’re thinking faster instead of staring at a blank page. You’re making decisions instead of getting stuck on execution. It’s not hands-off. It requires active engagement and clear taste.

The deeper I go into AI, the more I protect time away from screens. I start and end every day with pen and paper. Handwriting keeps thinking grounded.

Remember: you set the boundaries. You provide the judgment. You decide what’s good enough and what needs another pass. AI handles execution. You handle discernment.

Stop doing that, and you become just another cog in the machine. Replaceable. Irrelevant.

Human in the loop isn’t just a safety phrase. It’s a design principle.

What Lasts When Tools Don’t

I love Claude, but another AI lab might launch something better tomorrow. The underlying concepts, though, are durable.

Keep these in mind:

Context is everything. Generic prompts get generic answers. Rich context gets something that fits. Invest in context files.

Connected knowledge compounds. One document helps. Three referencing each other are powerful. Ten are transformational. It’s about connections, not volume.

Structure enables leverage. Purposeful folders. Clearly named and structured files. Think in systems, not one-off prompts.

Your context should outlive any tool. Models and interfaces will change, but clear, connected, structured files travel with you.

Build it once, and plug it into whatever comes next.
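If you do want a peek under the hood, here is a minimal sketch in Python of what “connections, not volume” can look like in practice. It is an illustration only, not the system described in this article and not how Claude Code works internally. It assumes your context files are Markdown and reference each other with standard Markdown links such as `[voice](voice.md)`. The function names `build_link_map` and `find_orphans` are made up for this example.

```python
import re
from pathlib import Path

# Assumption: files reference each other with Markdown links to .md files,
# e.g. [voice](voice.md) or [values](about-me.md#values).
LINK_PATTERN = re.compile(r"\[[^\]]*\]\(([^)#\s]+\.md)(?:#[^)]*)?\)")


def build_link_map(folder: Path) -> dict[str, set[str]]:
    """Map each Markdown file in the folder to the files it links to.

    Keys and values are paths relative to the folder. Links to files that
    do not exist, or that sit outside the folder, are ignored.
    """
    root = folder.resolve()
    link_map: dict[str, set[str]] = {}
    for path in sorted(root.rglob("*.md")):
        text = path.read_text(encoding="utf-8", errors="ignore")
        targets: set[str] = set()
        for raw_link in LINK_PATTERN.findall(text):
            target = (path.parent / raw_link).resolve()
            # Keep only links that point at a real file inside the folder.
            if target.is_file() and root in target.parents:
                targets.add(target.relative_to(root).as_posix())
        link_map[path.relative_to(root).as_posix()] = targets
    return link_map


def find_orphans(link_map: dict[str, set[str]]) -> list[str]:
    """Return files that link to nothing and are linked from nowhere."""
    linked_to = set().union(*link_map.values())
    return sorted(
        name
        for name, targets in link_map.items()
        if not targets and name not in linked_to
    )
```

Pointed at your own folder, for example `find_orphans(build_link_map(Path("my-context")))` with a hypothetical folder name, it lists the files that are not yet connected to anything. Those are good candidates for a link or two.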

What Schools Never Taught Us

The barrier to AI adoption isn’t technical. Most people can learn to use Claude or ChatGPT in an afternoon. The real barrier is what comes after digital fluency: the human skills needed to think clearly, structure work, and make good judgment calls.

Some people have these naturally: developers, strategists, architects, researchers. Anyone who’s organized complex work across time and people develops some version of this. They think in building blocks and see how pieces connect.

But most people weren’t taught to think this way. School taught them to consume information, not structure it. To follow instructions, not build systems.

That’s the missing link. Not prompts, tools, or technical skills.

I’m focusing on a specific subset of human skills. Other important skills include critical thinking, creativity, emotional intelligence, and ethical judgment. Some are meta-level. The ones I want to focus on are the operational skills used daily when building systems like this.

At the core, there are six:

Pattern recognition is noticing what repeats. Seeing that this problem resembles one you solved before, even when details differ. Extracting the general from the specific.

Symbolic thinking is seeing how concepts, objects, and people relate, using abstractions to represent what isn’t physically present. Connecting ideas across different domains.

Taste is knowing when something is good. When an AI output feels right versus off. When a structure is elegant versus clunky. It’s a skill that improves with practice.

Experimentation is the courage to try things before you fully understand them. To build something small and see what happens. To treat your work as a series of personal experiments, not a single high-stakes performance.

Clear communication is making your thinking legible to others (and to AI). Stating the goal, the context, and the constraints in plain language, then checking that what came back matches what you meant.

Management is the one skill here you might have learned formally. Defining success, breaking work into pieces, checking if it’s good, giving feedback when it’s not. Every profession has its own formats: product specs, shot lists, design briefs. The twist is that now you’re also delegating to machines.

These skills are learnable. You don’t need a computer science degree or years of training. You need practice: building things, noticing what works, adjusting. Training accelerates this, but the real learning happens in the doing.

That’s what turns AI from a tool into a partner that amplifies your thinking.

The technology will change, but these skills won’t go out of style.

Your Move

These systems are getting smarter every day. Context about you is being built whether you participate or not.

The question is: who owns that context? If you don’t curate your own thinking, you become a passenger. When your context lives in files you control, your thinking travels with you.

Use whatever tool fits: Claude Code, Claude Cowork, ChatGPT, Gemini. Any assistant can deliver great results with the right context. The focus should always be the value these tools unlock for you, not the tools themselves.

Some people figure this out on their own. Others want a mentor to guide them. Both paths are valid. But whatever route you choose, make it personal. Focus on your use cases, your workflows, your context.

The goal isn’t to learn AI in the abstract. It’s to amplify what you already do.

If this got you thinking, drop me a line and tell me what you’re wrestling with right now.

Stay in the loop,

Gus

[1] If Claude Code feels too technical, Anthropic just launched Claude Cowork. Same principles, less powerful, but an easier entry point.
